The last decade has seen remarkable improvements in the ability of computers to understand the world around them. Photo software automatically recognizes people's faces. Smartphones transcribe spoken words into text. Self-driving cars recognize objects on the road and avoid hitting them.

Underlying these breakthroughs is an artificial intelligence technique called deep learning. Deep learning is based on neural networks, a type of data structure loosely inspired by networks of biological neurons. Neural networks are organized in layers, with outputs from one layer connected to inputs of the next layer.

Computer scientists have been experimenting with neural networks since the 1950s. But two big breakthroughs—one in 1986, the other in 2012—laid the foundation for today's vast deep learning industry. The 2012 breakthrough—the deep learning revolution—was the discovery that we can get dramatically better performance out of neural networks with not just a few layers but with many. That discovery was made possible thanks to the growing amount of both data and computing power that had become available by 2012.

This feature offers a primer on neural networks. We'll explain what neural networks are, how they work, and where they came from. And we'll explore why—despite many decades of previous research—neural networks have only really come into their own since 2012.

This is the first in a multi-part series on machine learning—in future weeks we'll take a closer look at the hardware powering machine learning, examine how neural networks have enabled the rise of deep fakes, and much more.

Neural networks date back to the 1950s

Neural networks are an old idea—at least by the standards of computer science. Way back in 1957, Cornell University's Frank Rosenblatt published a report describing an early conception of neural networks called a perceptron. In 1958, with support from the US Navy, he built a primitive system that could analyze a 20-by-20 image and recognize simple geometric shapes.

Rosenblatt's main objective wasn't to build a practical system for classifying images. Rather, he was trying to gain insights about the human brain by building computer systems organized in a brain-like way. But the concept garnered some over-the-top enthusiasm.

"The Navy revealed the embryo of an electronic computer today that it expects will be able to walk, talk, see, write, reproduce itself and be conscious of its existence," The New York Times reported.

Fundamentally, each neuron in a neural network is just a mathematical function. Each neuron computes a weighted sum of its inputs: the larger an input's weight, the more that input affects the neuron's output. This weighted sum is then fed into a non-linear function called an activation function, a step that enables neural networks to model complex non-linear phenomena.
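
To make that concrete, here is a minimal Python sketch of a single neuron. The sigmoid activation, the bias term, and the specific numbers are assumptions chosen for illustration; the description above doesn't commit to any particular activation function.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias=0.0):
    """Combine the inputs using their weights, then apply a non-linear activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

# The second input's weight is ten times larger than the first's,
# so bumping the second input moves the output much more.
base = neuron_output([1.0, 1.0], [0.1, 1.0])
bump_first = neuron_output([2.0, 1.0], [0.1, 1.0])
bump_second = neuron_output([1.0, 2.0], [0.1, 1.0])
```

Comparing `bump_first` and `bump_second` against `base` shows the effect of the weights: the same change to an input matters more when its weight is larger.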

The power of Rosenblatt's early perceptron experiments—and of neural networks more generally—comes from their capacity to "learn" from examples. A neural network is trained by adjusting neuron input weights based on the network's performance on example inputs. If the network classifies an image correctly, weights contributing to the correct answer are increased, while other weights are decreased. If the network misclassifies an image, the weights are adjusted in the opposite direction.
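
As a rough illustration, the sketch below uses the classic perceptron learning rule on a toy problem. It is a simplification of the procedure described above: this rule changes weights only when the prediction is wrong, and leaves them alone when it is right. The toy data (logical AND), the bias term, and the learning rate are assumptions for the example, not details of Rosenblatt's system.

```python
def predict(weights, bias, inputs):
    """Threshold unit: output 1 if the weighted sum is positive, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

def train_perceptron(examples, epochs=20, learning_rate=1.0):
    """Classic perceptron rule: nudge weights only when a prediction is wrong."""
    n_inputs = len(examples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)  # -1, 0, or +1
            for i, x in enumerate(inputs):
                # Active inputs (x != 0) are pushed toward the correct answer.
                weights[i] += learning_rate * error * x
            bias += learning_rate * error
    return weights, bias

# Toy training set (hypothetical): output 1 only when both inputs are 1.
and_examples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_examples)
learned = [predict(weights, bias, inputs) for inputs, _ in and_examples]
```

After training, `learned` matches the labels in `and_examples`: the unit has "learned" AND purely from examples.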

This procedure allowed early neural networks to "learn" in a way that superficially resembled the behavior of the human nervous system. The approach enjoyed a decade of hype in the 1960s. But then an influential 1969 book by computer scientists Marvin Minsky and Seymour Papert demonstrated that these early neural networks had significant limitations.

Rosenblatt's early neural networks only had one or two trainable layers. Minsky and Papert showed that such simple networks are mathematically incapable of modeling complex real-world phenomena.
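
The best-known example of this limitation is the XOR function, which a single threshold unit cannot compute. The sketch below is only a brute-force search over a small grid of weights, not a proof, and the grid and helper names are hypothetical. It finds weights for AND easily and finds none for XOR.

```python
from itertools import product

def threshold_unit(w1, w2, bias, x1, x2):
    """A single neuron with a hard threshold at zero."""
    return 1 if w1 * x1 + w2 * x2 + bias > 0 else 0

def find_single_unit(truth_table, grid):
    """Return the first (w1, w2, bias) on the grid matching the table, else None."""
    for w1, w2, bias in product(grid, repeat=3):
        if all(threshold_unit(w1, w2, bias, x1, x2) == y
               for (x1, x2), y in truth_table.items()):
            return (w1, w2, bias)
    return None

grid = [i / 2 for i in range(-8, 9)]  # -4.0 to 4.0 in steps of 0.5
AND = {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1}
XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

and_solution = find_single_unit(AND, grid)  # some setting on the grid works
xor_solution = find_single_unit(XOR, grid)  # None: no setting on the grid works
```

The XOR failure isn't an accident of the grid. The four constraints XOR imposes on the weights and bias contradict one another, so no single unit of this kind can satisfy them.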

In principle, deeper neural networks were more versatile. But deeper networks would have strained the meager computing resources available at the time. More importantly, no one had developed an efficient algorithm to train deep neural networks. The simple hill-climbing algorithms used in the first neural networks didn't scale for deeper networks.

As a result, neural networks fell out of favor in the 1970s and early 1980s—part of that era's "AI winter."

A breakthrough algorithm

The fortunes of neural networks were revived by a famous 1986 paper that introduced the concept of backpropagation, a practical method to train deep neural networks.

Suppose you're an engineer at a fictional software company who has been assigned to create an app to determine whether or not an image contains a hot dog. You start with a randomly initialized neural network that takes an image as an input and outputs a value between 0 and 1—with a 1 meaning "hot dog" and a 0 meaning "not a hot dog."

To train the network, you assemble thousands of images, each with a label indicating whether or not the image contains a hot dog. You feed the first image—which happens to contain a hot dog—into the neural network. It produces an output value of 0.07—indicating no hot dog. That's the wrong answer; the network should have produced a value close to 1.

The goal of the backpropagation algorithm is to adjust input weights so that the network will produce a higher value if it is shown this picture again—and, hopefully, other images containing hot dogs. To do this, the backpropagation algorithm starts by examining the inputs to the neuron in the output layer. Each input value has a weight variable. The backpropagation algorithm will adjust each weight in a direction that would have produced a higher value. The larger an input's value, the more its weight gets increased.

So far I've just described a simple hill-climbing approach that would have been familiar to researchers in the 1960s. The breakthrough of backpropagation was the next step: the algorithm uses partial derivatives to apportion "blame" for the wrong output among the neuron's inputs. The algorithm computes how the final neuron's output would have been affected by a small change in each input value—and whether the change would have pushed the result closer to the right answer, or away from it.

The result is a set of error values for each neuron in the second-to-last layer: essentially, a signal estimating whether each neuron's value was too high or too low. The algorithm then repeats the adjustment process for these neurons in the second-to-last layer. For each neuron, it makes small changes to the input weights to nudge the network closer to the correct answer.

Then, once again, the algorithm uses partial derivatives to compute how the value of each input to the second-to-last layer contributed to the errors in that layer's output—and propagates the errors back to the third-to-last layer, where the process repeats once more.
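
The steps above can be sketched in a few lines of Python for a tiny network with one hidden layer. Everything specific here is an assumption for illustration: the sigmoid activations, the squared-error loss, the learning rate, the starting weights, and the two-number stand-in for an image. A real image classifier would be far larger.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, w_hidden, w_out):
    """Return the hidden-layer values and the final output for one input."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)))
    return hidden, output

def backprop_step(x, target, w_hidden, w_out, learning_rate=0.5):
    """One gradient step on squared error, working from the output layer backward."""
    hidden, output = forward(x, w_hidden, w_out)

    # Error signal at the output neuron (derivative of 0.5 * (output - target)**2
    # with respect to the neuron's weighted sum).
    delta_out = (output - target) * output * (1.0 - output)

    # Apportion "blame" to each hidden neuron using partial derivatives
    # (the chain rule), based on the output weights before they are changed.
    delta_hidden = [delta_out * w * h * (1.0 - h) for w, h in zip(w_out, hidden)]

    # Adjust the output neuron's weights: larger input values get larger changes.
    new_w_out = [w - learning_rate * delta_out * h for w, h in zip(w_out, hidden)]

    # Repeat the same adjustment one layer back.
    new_w_hidden = [
        [w - learning_rate * d * xi for w, xi in zip(row, x)]
        for row, d in zip(w_hidden, delta_hidden)
    ]
    return new_w_hidden, new_w_out

# Hypothetical two-feature "image" labeled 1 ("hot dog").
x, target = [1.0, 0.5], 1.0
w_hidden = [[0.2, -0.4], [-0.3, 0.1]]
w_out = [-1.0, -1.5]

_, before = forward(x, w_hidden, w_out)
for _ in range(50):
    w_hidden, w_out = backprop_step(x, target, w_hidden, w_out)
_, after = forward(x, w_hidden, w_out)
```

Here `before` is well below 0.5, the wrong answer for a hot dog image, and `after` is higher. Training on a single example like this only shows the mechanics; a real network repeats the step across thousands of labeled images.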

I've just given a simplified summary of how backpropagation works. If you want the full mathematically rigorous details, I recommend Michael Nielsen's book on the topic. For our purposes, the key point is that backpropagation radically expanded the scope of trainable neural networks. People were no longer limited to simple networks with one or two layers. They could build networks with five, ten, or fifty layers, and these networks could have arbitrarily complex internal structures.

The invention of backpropagation launched a second neural networks boom that began producing practical results. In 1998, a group of AT&T researchers showed how to use neural networks to recognize hand-written digits, allowing automated check processing.

"The main message of this paper is that better pattern recognition systems can be built by relying more on automatic learning and less on hand-designed heuristics," the authors wrote.

Still, at this phase neural networks were just one of many techniques in the toolboxes of machine learning researchers. When I took a course on artificial intelligence as a grad student in 2008, neural networks were listed as just one of nine machine learning algorithms we could choose to implement for our final assignment. But deep learning was about to eclipse other techniques.

Big data shows the power of deep learning

Backpropagation made deeper networks more computationally tractable, but those deeper networks still required more computing resources than shallower networks. Research results in the 1990s and 2000s often suggested that there were diminishing returns to making neural networks more complex.

Then a famous 2012 paper—which described a neural network dubbed AlexNet after lead researcher Alex Krizhevsky—transformed people's thinking. Dramatically deeper networks could deliver breakthrough performance but only if they were combined with ample computing power and lots and lots of data.

AlexNet was developed by a trio of University of Toronto computer scientists who were entering an academic competition called ImageNet. The organizers of the competition had scraped the Internet and assembled a corpus of 1 million images—each of which was labeled with one of a thousand object categories like "cherry," "container ship," or "leopard." AI researchers were invited to train their machine learning software on some of the images and then try to guess the correct labels for other images the software hadn't seen before. Competing software chose five possible labels out of the thousand categories for each picture. The answer was judged successful if one of these five labels matched the actual label in the data set.
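
The scoring rule is simple enough to write down. The sketch below computes a top-5 error rate; the function name and the three sample predictions are hypothetical, made up for the example.

```python
def top5_error_rate(predictions, true_labels):
    """Fraction of images whose true label is missing from the five guesses."""
    misses = sum(
        1 for guesses, truth in zip(predictions, true_labels)
        if truth not in guesses[:5]
    )
    return misses / len(true_labels)

# Hypothetical guesses for three images.
predictions = [
    ["leopard", "cheetah", "jaguar", "tiger", "lynx"],
    ["container ship", "dock", "liner", "tugboat", "pier"],
    ["strawberry", "apple", "pomegranate", "fig", "orange"],
]
true_labels = ["leopard", "container ship", "cherry"]

rate = top5_error_rate(predictions, true_labels)  # 1 miss out of 3
```

Note that the order of the five guesses doesn't matter under this rule: a correct label in fifth place counts the same as one in first place.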

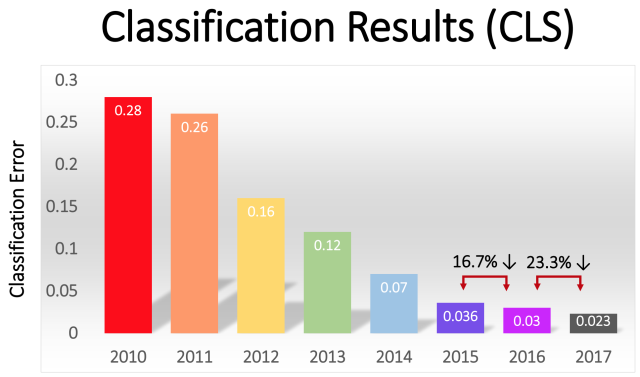

It was a hard problem, and prior to 2012 the results hadn't been very good. The 2011 winner had a top-5 error rate of 25%.

In 2012, the AlexNet team blew their rivals out of the water with a top-5 error rate of 16%. The nearest rival that year had a 26% error rate.

The Toronto researchers combined several techniques to achieve their breakthrough results. One was the use of convolutional neural networks. I did a deep dive on convolutions last year that gives the full explanation. In a nutshell, a convolutional network effectively trains small neural networks (ones whose inputs are perhaps seven to eleven pixels on a side) and then "scans" them across a larger image.
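
The scanning step can be sketched as follows. This is a bare-bones illustration, assuming a single hand-written 2-by-2 filter, no padding, and a stride of one. In a real convolutional network the filter values are learned during training, and many filters run in parallel.

```python
def scan_filter(image, kernel):
    """Slide one small square kernel over every position of a 2-D image."""
    k = len(kernel)
    rows = len(image) - k + 1
    cols = len(image[0]) - k + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j]
                for i in range(k) for j in range(k))
            for c in range(cols)
        ]
        for r in range(rows)
    ]

# Hypothetical filter that responds to a short bright diagonal.
kernel = [[1, 0],
          [0, 1]]

def image_with_pattern_at(row, col, size=5):
    """Blank image with the diagonal pattern placed at (row, col)."""
    img = [[0] * size for _ in range(size)]
    img[row][col] = 1
    img[row + 1][col + 1] = 1
    return img

response_a = scan_filter(image_with_pattern_at(0, 0), kernel)
response_b = scan_filter(image_with_pattern_at(2, 3), kernel)
```

The same filter produces its peak response (a value of 2) at position (0, 0) in `response_a` and at (2, 3) in `response_b`. One set of weights finds the pattern wherever it appears, which is the point of scanning rather than training a separate detector for each region.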

"It's like taking a stencil or pattern and matching it against every single spot on the image," AI researcher Jie Tang told Ars last year. "You have a stencil outline of a dog, and you basically match the upper-right corner of it against your stencil—is there a dog there? If not, you move the stencil a little bit. You do this over the whole image. It doesn't matter where in the image the dog appears. The stencil will match it. You don't want to have each subsection of the network learn its own separate dog classifier."

Another key to AlexNet's success was the use of graphics cards to accelerate the training process. Graphics cards contain massive amounts of parallel processing power that's well suited for the repetitive calculations required to train a neural network. By offloading computations onto a pair of GPUs—Nvidia GTX 580s, each with 3GB of memory—the researchers were able to design and train an extremely large and complex network. AlexNet had eight trainable layers, 650,000 neurons, and 60 million parameters.

Finally, AlexNet's success was made possible by the large size of the ImageNet training set: a million images. It takes a lot of images to fine-tune 60 million parameters. It was the combination of a complex network and a large data set that allowed AlexNet to achieve a decisive victory.

An interesting question to ask here is why an AlexNet-like breakthrough didn't happen years earlier:

- The pair of consumer-grade GPUs used by the AlexNet researchers was far from the most powerful computing device available in 2012. More powerful computers existed five and even 10 years earlier. Moreover, the technique of using graphics cards to accelerate neural network training had been known since at least 2004.

- A million images was an unusually large data set for training machine learning algorithms in 2012, but Internet scraping was hardly a new technology in 2012. It wouldn't have been that hard for a well-funded research group to assemble a data set that large five or ten years earlier.

- The main algorithms used by AlexNet were not new. The backpropagation algorithm had been around for a quarter-century by 2012. The key ideas behind convolutional networks were developed in the 1980s and 1990s.

So each element of AlexNet's success existed separately long before the AlexNet breakthrough. But apparently no one had thought to combine them—in large part because no one realized how powerful the combination would be.

Making neural networks deeper didn't do much to improve performance if the training data set wasn't big. Conversely, expanding the size of the training set didn't improve performance very much for small neural networks. You needed both deep networks and large data sets, plus the vast computing power required to complete the training process in a reasonable amount of time, to see big performance gains. The AlexNet team was the first to put all three elements together in one piece of software.

The deep learning boom

Once AlexNet demonstrated how powerful deep neural networks could be with enough training data, a lot of people noticed—both in the academic research community and in industry.

The most immediate impact was on the ImageNet competition itself. Until 2012, most entrants to the ImageNet competition used techniques other than deep learning. In the 2013 competition, the ImageNet sponsors wrote, the "vast majority" of entrants were based on deep learning.

The winning error rate in the competition kept falling, from AlexNet's already impressive 16% in 2012 to 2.3% in 2017.

The deep learning revolution quickly spread into industry, too. In 2013, Google acquired a startup formed by the authors of the AlexNet paper and used its technology as the basis for the image search function in Google Photos. By 2014, Facebook was touting its own facial recognition software based on deep learning. Apple has been using deep learning for iOS' face recognition technology since at least 2016.

Deep learning has also powered recent improvements in voice recognition technology. Apple's Siri, Amazon's Alexa, Microsoft's Cortana, and Google Assistant all use deep learning, whether for understanding users' words, for generating more natural voices, or both.

In recent years, the industry has enjoyed a virtuous circle where more computing power, more data, and deeper networks reinforce one another. The AlexNet team built its network using GPUs because they offered a lot of parallel computing power at a reasonable price. But over the last few years, more and more companies have been designing custom silicon specifically for machine learning applications.

Google announced a custom neural network chip called a Tensor Processing Unit in 2016. The same year, Nvidia announced new GPU hardware, called the Tesla P100, that was optimized for neural networks. Intel responded with an AI chip of its own in 2017. In 2018, Amazon announced its own AI chip, which would be available for use through Amazon's cloud services. Even Microsoft has reportedly been working on AI chips.

Smartphone makers have also been working on chips to enable mobile devices to do more neural network computations locally rather than having to upload data to a server for analysis. Such on-device computations reduce latency while also improving user privacy.

Even Tesla has gotten into the custom chip game. Earlier this year, Tesla showed off a powerful new computer optimized for neural network calculations. Tesla has dubbed it the Full Self-Driving Computer and has positioned it as a key part of the company's strategy to turn the Tesla fleet into autonomous vehicles.

The wider availability of AI-optimized computing power has driven a growing demand for data to train increasingly complex neural networks. This dynamic is most obvious in the self-driving car sector, where companies have gathered millions of miles of data from real roads. Tesla is able to harvest such data automatically from customers' cars, while rivals like Waymo and Cruise have paid safety drivers to drive their cars around on public roads.

The thirst for data gives an advantage to big online companies that already have access to large volumes of user data.

Deep learning has conquered so many different fields because it is extraordinarily versatile. Through decades of trial and error, researchers have developed some generic building blocks for performing common machine learning tasks—like the convolutions that enable effective image recognition. But if you have a suitable high-level network design and enough data, the actual training process is straightforward. Deep networks are able to recognize an extraordinary range of complex patterns without explicit guidance from human designers.

There are limits, of course. For example, some people have flirted with the idea of training self-driving cars "end to end" using deep learning—in other words, putting camera images in one end of the neural network and taking instructions for the steering wheel and pedals on the other side. I'm skeptical that this approach will work. Neural networks have not yet demonstrated the ability to engage in the kind of complex reasoning required to understand some roadway conditions. Moreover, neural networks are a "black box," with little visibility into their internal workings. That would make it difficult to evaluate and verify the safety of such systems.

But deep learning has enabled big performance leaps in a surprisingly wide range of applications. We can expect more progress in this area in the years to come.

December 02, 2019 at 08:00PM

https://ift.tt/2sxJ0ZP

How neural networks work—and why they’ve become a big business - Ars Technica